
At an event where Google launched two new Pixel phones, showcased a Pixel smartwatch, and teased a Pixel tablet for next year, one element was conspicuously absent.

Throughout the event, there was no obligatory nod in the direction of Google’s other hardware partners—the Samsungs, Motorolas, and OnePluses that largely keep the Android ecosystem humming along. Their names never came up, and a large chunk of Google’s presentation covered features that will never be available on their phones.

Granted, the entire point of the event was for Google to showcase its own hardware, not to promote Android as a whole. Still, the keynote underscored how Google is now building an ecosystem of its own, one that supersedes Android and doesn’t rely on third-party device makers. After years of providing a platform for other companies’ hardware, Google is ready to go it alone.

NEW CHIP, NEW SKILLS

For the new Pixel 7 and Pixel 7 Pro, Google talked up its custom Tensor G2 processor as a major differentiator, enabling features not found on other Android phones (and, in some cases, not on the iPhone either).

Both phones, for instance, employ some trickery to offer convincing digital zoom, creating composite images from two lenses when you’re at a zoom level in between them. The Pixel 7 Pro, meanwhile, can digitally zoom up to 30X from its 5X optical lens, partly by cropping down the lens’ 48-megapixel image (something we’ve seen other phone makers do as well) and partly by using AI to stabilize the viewfinder and remove noise.

The Pixel 7 is imbued with AI chops in lots of other ways. It can remove blur from existing photos, including those shot on other phones, and filter out background noise around the person you’re talking to on the phone. Google’s even taking another stab at face recognition, using only the Pixel 7’s front camera instead of the radar sensors it used on the Pixel 4. (You’ll still need a fingerprint for apps that require biometric input, such as banking apps, but Google says the system can’t easily be fooled by a photo of your face, a common pitfall on other Android phones.)

As Google tells it, these kinds of features are only possible because of the Tensor chip, so it can only offer them by building its own phones.

A NEW FITNESS PLATFORM

Google’s ecosystem ambitions are also finally extending to smartwatches.

After working with Samsung to revamp its Wear OS platform, Google gave Samsung first dibs on releasing new Wear OS watches, starting with last year’s Galaxy Watch 4. In turn, Samsung pre-loaded the watch with its own Health app, Bixby assistant, and interface design.

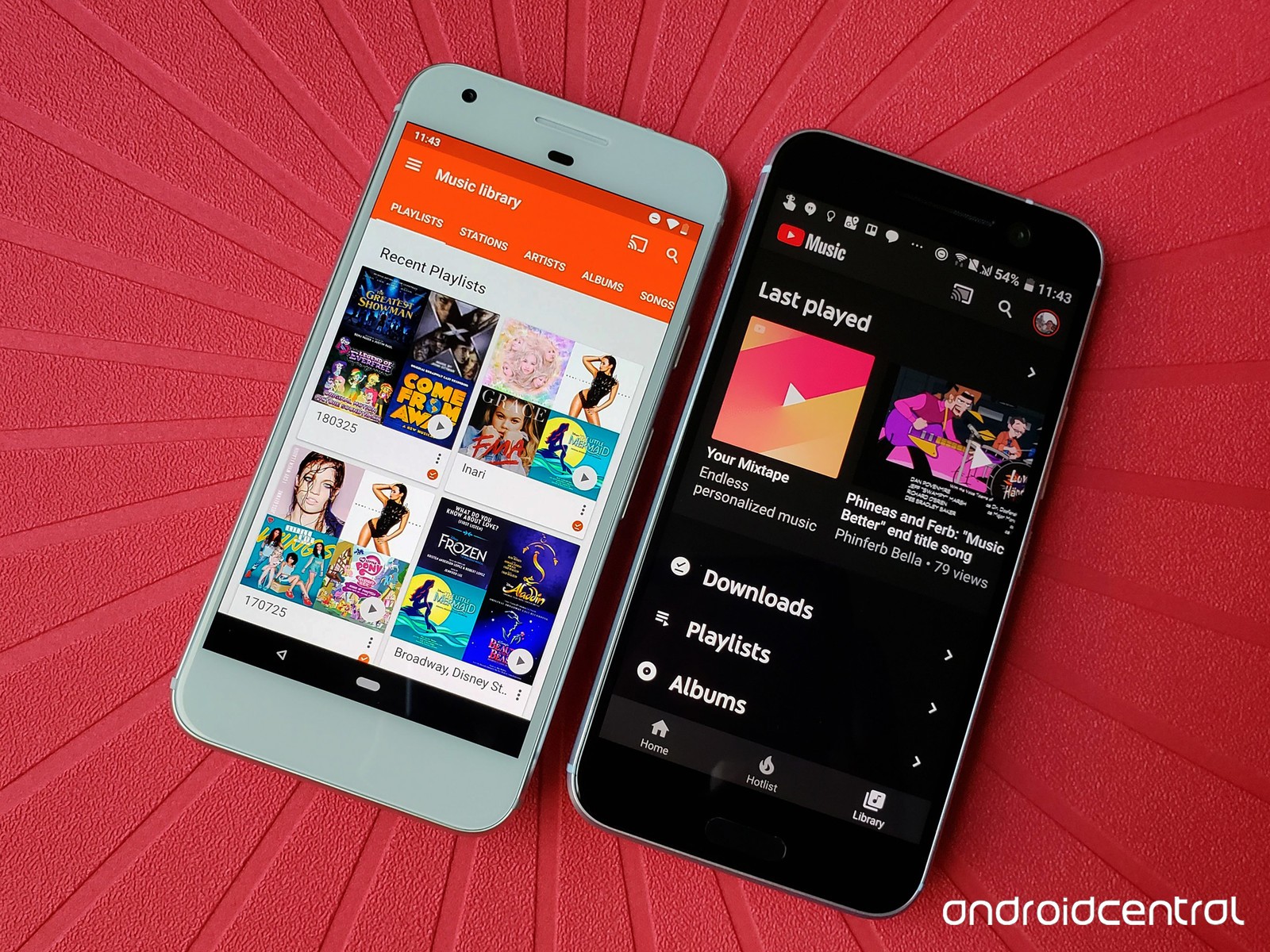

The Pixel Watch is more aligned with Google’s AI-driven vision. It uses Google Assistant, features the same “Material You” design language as the Pixel phones, and ships with plenty of Google apps, such as Maps, YouTube Music, and Google Pay.

More importantly, it uses Fitbit as the default app for tracking steps, heart rate, exercise, and sleep. (Google completed its acquisition of Fitbit last year.) Although Google offered a separate service called Google Fit on earlier Wear OS devices, the company may feel that Fitbit’s platform is better positioned to compete with Apple’s health and fitness offerings in the long run.

That makes the outlook a bit murky for other Wear OS watches, such as those made by Skagen, Mobvoi, and Tag Heuer. While they’re supposed to start getting Google’s revamped software this year, it’s unclear if they’ll get the same Fitbit integration. For Google, Fitbit’s services may be another way that it plans to make its own hardware stand out.

A NEW VISION FOR TABLETS

Alongside the newly launched phones and watch, Google also gave a brief glimpse at the Pixel Tablet, which it plans to launch next year. While the tablet itself looks unremarkable, it does have one neat trick: It can magnetically attach to a speaker dock, effectively turning it into a smart display akin to the Nest Hub Max. That way, it can display photos, play music, and serve as a stationary smart home hub.

The tablet is partly an attempt to make up for past neglect. After doing little to improve the Android tablet experience over the years, Google declared last year that tablets are the “future of computing,” and it’s now trying to spark the category with interesting new hardware.

But the Pixel Tablet and its docking station also slide right into Google’s broader vision for ambient computing. The company has recognized a common problem with tablets (we often leave them unused for days on end) and is solving it by turning the tablet into an always-on touchpoint for the rest of the Google ecosystem.

MISSING PIECES

Toward the end of its event, Google tried to point out all the ways in which these pieces will fit together. You might, for instance, use a Pixel 7 to take a photo, a Pixel Tablet to edit that photo, and a Pixel Watch to turn that photo into a watch face. You might also use your watch to broadcast that you’re on the way home via Google Assistant, and have that message play out on Nest speakers around the house. The implicit message is that Apple isn’t alone in being able to combine hardware, software, and services.

Still, the Pixel lineup doesn’t quite feel complete yet. Its tablet, for one thing, won’t launch until 2023, and it’s unclear how heavy-duty computing will fit in amid rumors that Google has disbanded the team working on a Pixel laptop. We’ll also have to see how well all of this ecosystem integration works in practice, as Google has a long history of hatching grand ideas and failing to execute on them.

But at least Google has a clear vision of where it wants to take personal computing, and it’s a place where other hardware makers might not be needed anymore.

Source: fastcompany.com

Written by: New Generation Radio
