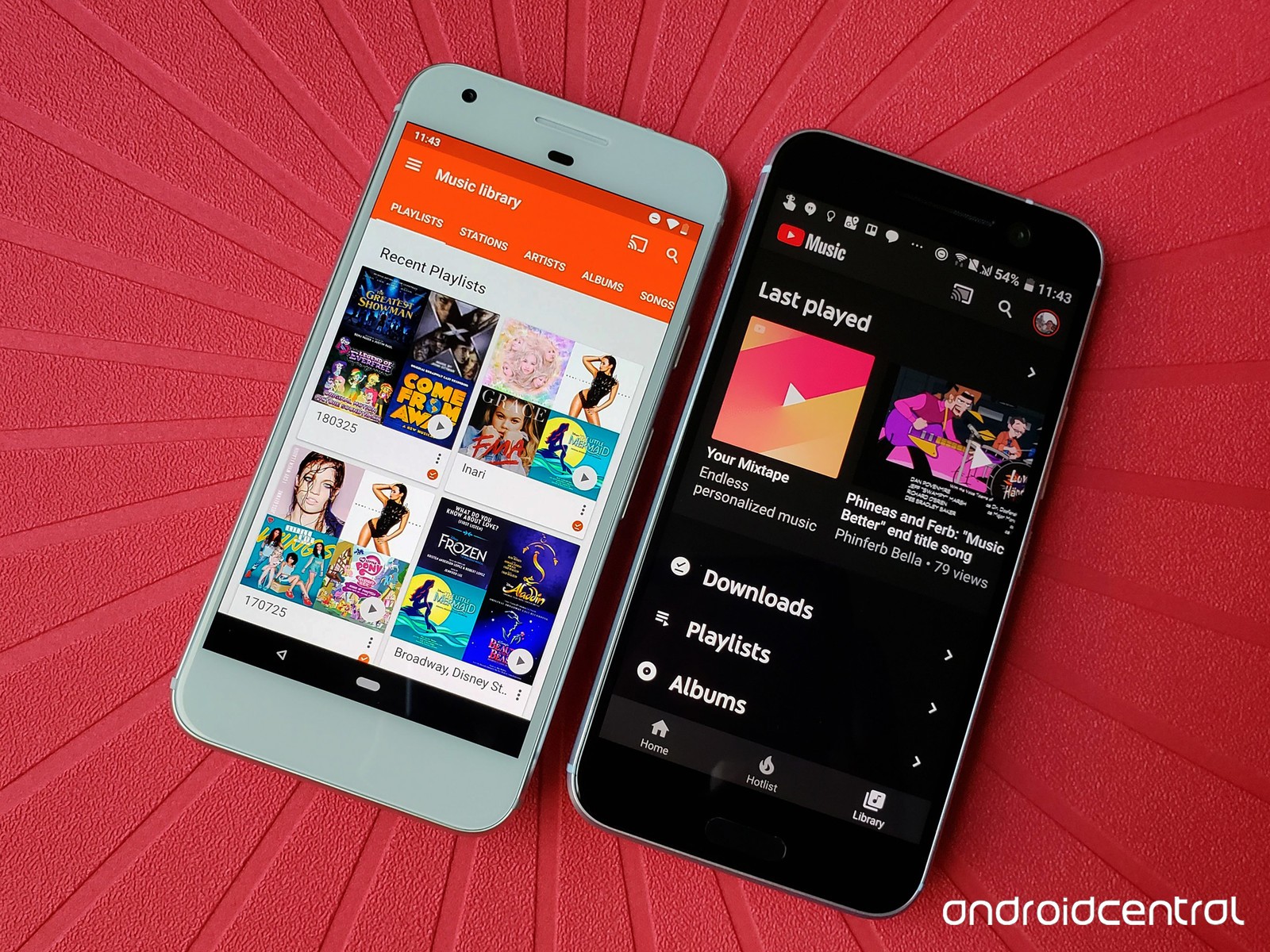

Better hardware and new AI smarts means this year’s Google phone is better with low-light photos while adding a new portrait mode.

What do you do to top Google’s highly regarded first-generation Pixel phone camera? The same, only more.

More HDR+ image processing. More chip power. More artificial intelligence. And more image stabilization. The result for photos: “All the fundamentals are improved,” said Tim Knight, head of Google’s Pixel camera engineering team. On top of that are new features including motion photos, face retouching and perhaps most important, portrait mode.

In the days of film, a photo was the product of a single release of the camera’s shutter. In the digital era, it’s as much the result of computer processing as old-school factors like lens quality.

It’s a strategy that plays to Google’s strengths. Knight hails from Lytro, a startup that tried to revolutionize photography with a new combination of lenses and software, and he works with Marc Levoy, who as a Stanford professor coined the term “computational photography.” It may sound like a bunch of technobabble, but all you really need to know is that it does produce a better photo.

It’s no wonder Google is investing so much time, energy and money into the Pixel camera. Photography is a crucial part of phones these days as we document our lives, share moments with our contacts and indulge our creativity. A phone with a bad camera is like a car with a bad engine — a deal-killer for many. Conversely, a better shooter can be the feature that gets you to finally upgrade to a new model.

Cameras are a big-enough deal to launch major ad campaigns like Apple’s “shot on iPhone” billboards and Google’s “#teampixel” response.

Your needs and preferences may vary, but my week of testing showed the Pixel 2 to be a strong competitor and a significant step ahead of last year’s model. Be sure to check CNET’s full Pixel 2 review for all of the details on the phone.

AI brains

Some of Google’s investment in camera technology takes the form of AI, which pervades just about everything Google does these days. The company won’t disclose every area where the Pixel 2 camera uses machine learning and “neural network” technology, which works something like the human brain, but it’s at least used in setting photo exposure and portrait-mode focus.

Neural networks do their learning via lots of real-world data. A neural net that sees enough photographs labeled with “cat” or “bicycle” eventually learns to identify those objects, for example, even though the inner workings of the process aren’t the if-this-then-that sorts of algorithms humans can follow.
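To make the idea of learning from labeled examples concrete, here is a minimal sketch in Python. It is not Google's system and nowhere near the scale of a real neural network: it trains a single artificial neuron on a handful of made-up examples. The two input features (a "wheel-likeness" score and a "fur-likeness" score), the function names and the training settings are all assumptions chosen for illustration.

```python
import math

def train_neuron(samples, labels, epochs=2000, lr=0.5):
    """Fit one logistic neuron to labeled examples by gradient descent."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            z = sum(w * f for w, f in zip(weights, features)) + bias
            prediction = 1.0 / (1.0 + math.exp(-z))  # value between 0 and 1
            error = prediction - label
            # Nudge each weight a little in the direction that shrinks the error.
            weights = [w - lr * error * f for w, f in zip(weights, features)]
            bias -= lr * error
    return weights, bias

def predict(weights, bias, features):
    """Return 1 ("bicycle") or 0 ("cat") for one example."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if z > 0 else 0

# Hypothetical toy data: [wheel-likeness, fur-likeness] scores per photo.
samples = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [1, 1, 0, 0]  # 1 = "bicycle", 0 = "cat"

weights, bias = train_neuron(samples, labels)
print(predict(weights, bias, [0.8, 0.2]))  # a photo the neuron never saw
```

Nothing in the code spells out a rule such as "wheels mean bicycle." The weights drift toward useful values only because the labeled examples push them there, which is the sense in which the inner workings are learned rather than written by hand.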

“It bothered me that I didn’t know what was inside the neural network,” said Levoy, who initially was a machine-learning skeptic. “I knew the algorithms to do things the old way. I’ve been beat down so completely and consistently by the success of machine learning” that now he’s a convert.

One thing Google didn’t add more of was actual cameras. Apple’s iPhone 8 Plus, Samsung’s Galaxy Note 8, and other flagship phones these days come with two cameras, but for now at least, Google concentrated its energy on making that single camera as good as possible.

“Everything you do is a tradeoff,” Knight said. Second cameras often aren’t as good in dim conditions as the primary camera, and they consume more power while taking up space that could be used for a battery. “We decided we could deliver a really compelling experience with a single camera.”

Google’s approach also means its single-lens camera can use portrait mode even with add-on phone-cam lenses from Moment and others.

Light from darkness

So what makes the Google Pixel 2 camera tick?

A key foundation is HDR+, a technology that deals with the age-old photography problem of dynamic range. A camera that can capture a high dynamic range (HDR) records details in the shadows without turning bright areas like somebody’s cheeks into distracting glare.

Google’s take on the problem starts by capturing up to 10 photos, all very underexposed so that bright areas like blue skies don’t wash out. It picks the best of the bunch, weeding out blurry ones, then combines the images to build up a properly lit image.
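The burst idea described above can be sketched in a few lines of Python. This is a simplified illustration, not Google's HDR+ pipeline: the sharpness score (squared differences between neighboring pixels), the plain averaging step, the fixed brightening gain and the function names are all assumptions, and a real pipeline would also have to align the frames before merging them.

```python
from statistics import mean

def sharpness(frame):
    """Score a grayscale frame by how strongly neighboring pixels differ."""
    diffs = [
        (row[x + 1] - row[x]) ** 2
        for row in frame
        for x in range(len(row) - 1)
    ]
    return mean(diffs)

def merge_burst(frames, keep=3, gain=4.0):
    """Keep the sharpest frames, average them, then brighten the result."""
    best = sorted(frames, key=sharpness, reverse=True)[:keep]
    height, width = len(best[0]), len(best[0][0])
    merged = []
    for y in range(height):
        row = []
        for x in range(width):
            avg = mean(frame[y][x] for frame in best)  # averaging reduces noise
            row.append(min(1.0, avg * gain))           # brighten, clip at white
        merged.append(row)
    return merged

# Hypothetical 2x2 underexposed frames with pixel values from 0.0 to 1.0.
sharp_a = [[0.0, 0.2], [0.2, 0.0]]
sharp_b = [[0.02, 0.18], [0.18, 0.02]]
blurry = [[0.1, 0.1], [0.1, 0.1]]

print(merge_burst([sharp_a, blurry, sharp_b], keep=2))
```

Because every frame starts out dark, bright regions stay below the clipping point in each capture, and averaging several frames keeps noise down when the shadows are brightened afterward.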

Compared to last year, Google went even farther down the HDR+ path. The raw frames are even darker on the Pixel 2. “We’re underexposing even more so we can get even more dynamic range,” Knight said.

Google also uses artificial intelligence to judge just how bright is right, Levoy said. Google trained its AI with many photos carefully labeled so the machine-learning system could figure out what’s best. “What exposure do you want for this sunset, that snow scene?” he said. “Those are important decisions.”

Stephen Shankland/CNET

HDR+ also works better this year because the Pixel 2 and its bigger Pixel 2 XL sibling add optical image stabilization (OIS). That means the camera tries to counteract camera shake by physically moving optical elements. That’s a sharp contrast to the first Pixel, which uses only software-based electronic image stabilization to try to un-wobble the phone.

With optical stabilization, the Pixel 2 phones get a better foundation for HDR. “With OIS, most of the frames are really sharp. When we choose which frames to combine, we have a large number of excellent frames,” Knight said.

Source: cnet.com

Written by: New Generation Radio

COPYRIGHT 2020. NGRADIO