He notes that similar plans were debated in the 1990s regarding bits of computer software known as “intelligent agents” that were used to handle legal contracts. The plans were dropped, though, as “overkill,” says Schafer. “But there are certain things at the moment that, by default, only humans can do, which in the future we might allow machines to do as well.”

ESTABLISHING LIABILITY IS TOUGH ENOUGH

At any rate, “electronic personhood” is more of a sideshow than a serious consideration at this point; legal liability for autonomous systems is the report’s most important concern. As a baseline, the report’s authors suggest that the EU draft legislation making it clear that people only have to establish “a causal link between the harmful behavior of the robot and the damage suffered by the injured party” to be able to claim compensation from a company.

This is intended to stop companies from shifting blame onto the autonomous systems themselves. So, for example, the makers of a self-driving car can’t claim they’re not responsible if it crashes just because it was driving itself at the time. Delvaux suggests this won’t be much of a problem in the short term, as the current generation of robots simply isn’t complex enough to make establishing causation difficult. “But when you have the next generation of robots which are self-learning, that is another question,” she says.

Schafer says: “The concern they are having is that robots as autonomous agents might become so unpredictable they interrupt the chain of causal attribution. And the company says: ‘That was not foreseeable for us and we couldn’t know our self-learning system would make the car start chasing pedestrians off the street.’”

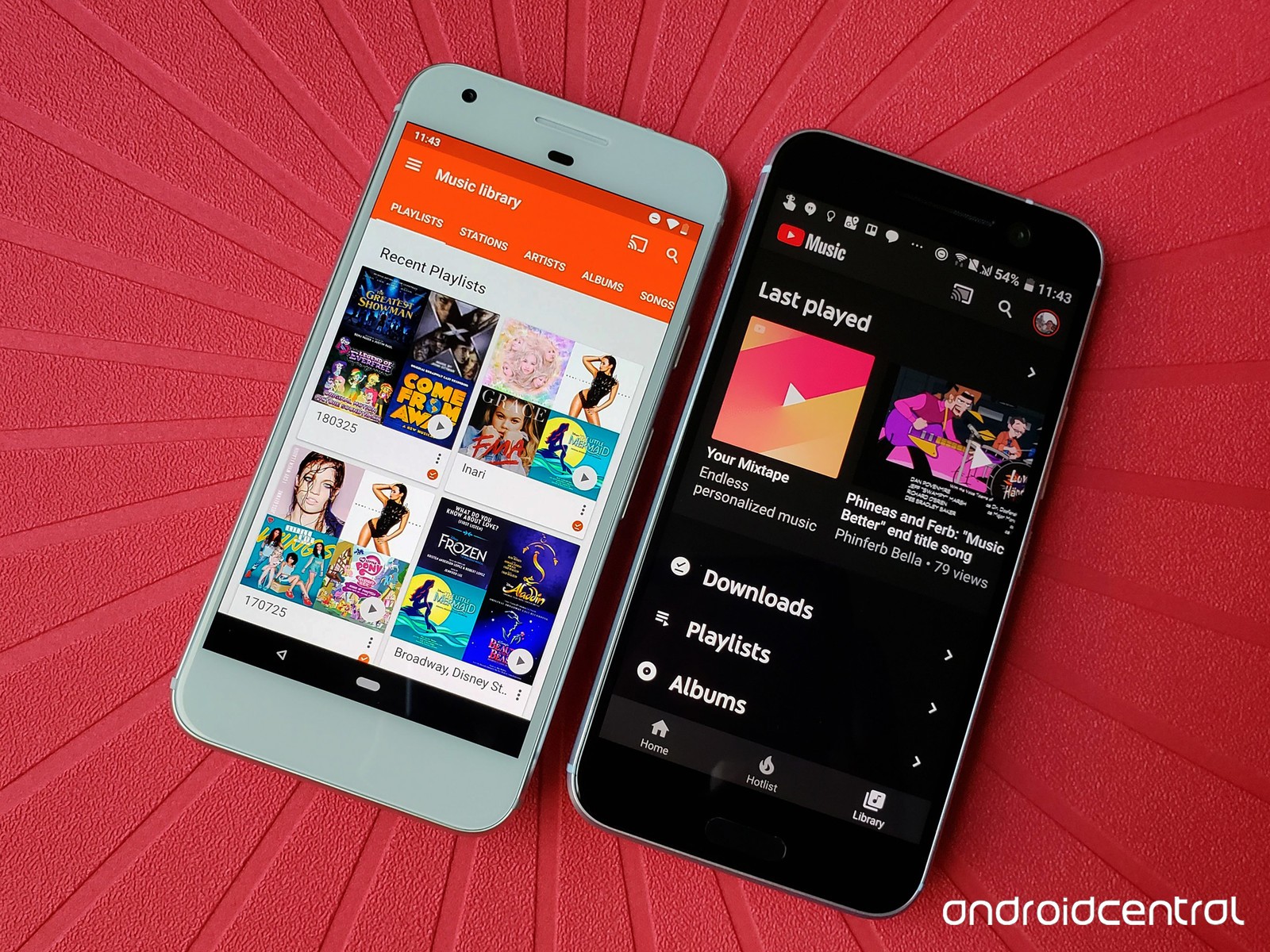


This is where things get murky. The report suggests creating a legal system where robot liability is proportionate to autonomy: the more self-directed a system is, the more responsibility it assumes (as opposed to its human operator). But that just raises more questions, like how do you measure autonomy in the first place? And what if the self-learning system is learning from its environment? If a self-driving car is taught bad driving habits by its owner and crashes, is that still the manufacturer’s fault?

One way to side-step these problems, suggests the report, might be to create a mandatory insurance scheme for autonomous robots. If you make a robot (or the software that controls it), you pay into the scheme. If an accident happens, the hurt party then receives compensation from the fund. That way there’s less incentive for companies to try and dodge responsibility — they’ve already paid out the money they’ll give away.

WHAT NEXT?

It should be stressed that the report’s contents are only suggestions. It’s still in draft status and has yet to be passed on to the European Commission, the part of the EU that actually proposes laws. When that happens, sometime in the next couple of months, the Commission can take notice of the recommendations (which sources say is most likely) and then start thinking up possible legislation. But this would be a long process, taking a year at least, with no way of saying whether the report’s recommendations or wording would be heeded.

For the moment, though, we’re not in dire need of new legislation. Olaf Cramme, a tech and policy specialist at management consultancy Inline Policy, says the current system can cope with liability claims involving autonomous systems — just about.

“The tort system is very developed and there are a variety of laws that could apply in these cases,” Cramme tells The Verge. “But there are some fundamental problems. Accidents caused by self-driving vehicles, for example, will increase the complexity of any case.” Cramme says it’s probably better to draft new legislation for these scenarios, rather than clogging up the courts by forcing lawyers to follow ever-more-complex lines of liability. Insurance companies are open to this, he says. “They’re excited because it should open new insurance models and new products which they can sell. It’s a great commercial opportunity.”

So: new laws are going to be needed, but we’re not sure what yet, and the concept of “electronic personhood” might be too freighted with meaning to be politically useful. Change is going to come, though, one way or another. “Robots are not science fiction,” says Delvaux. “These are not extraordinary beings attacking our world. It is technology that should serve us, and we need a realistic view of what is possible.”
