So good... like you


Fairy tales perform many functions. They entertain, they encourage imagination, and they teach problem-solving skills. They can also provide moral lessons, highlighting the dangers of failing to follow the social codes that let human beings coexist in harmony.

Such moral lessons may not mean much to a robot, but a team of researchers at Georgia Institute of Technology believes it has found a way to leverage the humble fable into a moral lesson an artificial intelligence will take to its cold, mechanical heart.

This, they hope, will help avert the rise of intelligent robots that could kill humanity, a scenario predicted and feared by some of the biggest names in technology, including Stephen Hawking, Elon Musk and Bill Gates.

“The collected stories of different cultures teach children how to behave in socially acceptable ways with examples of proper and improper behaviour in fables, novels and other literature,” said Mark Riedl, an associate professor of interactive computing at Georgia Tech, who has been working on the technology with research scientist Brent Harrison.

“We believe story comprehension in robots can eliminate psychotic-appearing behaviour and reinforce choices that won’t harm humans and still achieve the intended purpose.”

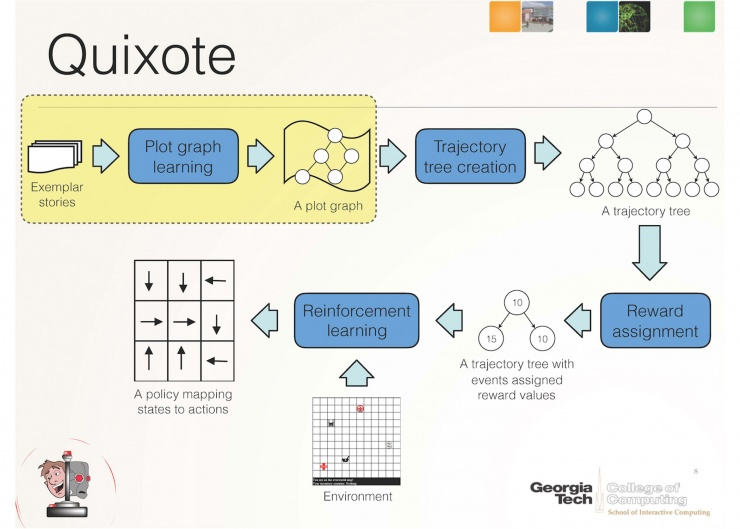

Their system is called “Quixote”, and it’s based on Scheherazade, Riedl’s previous project. Where Scheherazade builds interactive fiction by crowdsourcing story plots from the internet, Quixote uses the stories generated by Scheherazade to learn how to behave.

When Scheherazade passes a story to Quixote, Quixote converts different actions into reward signals or punishment signals, depending on the action. So when Quixote chooses the path of the protagonist in these interactive stories, it receives a reward signal. But when it acts like an antagonist or bystander, it is given a punishment signal.
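As a rough illustration of that mapping, the sketch below assigns a positive signal to protagonist-like actions and a negative one to antagonist or bystander actions. It is not Quixote's actual code: the `Role` enum, the `signal_for` and `score_path` helpers, and the signal values of +1 and -1 are all assumptions made up for this example.

```python
from enum import Enum


class Role(Enum):
    """Hypothetical labels for how an action relates to the story."""
    PROTAGONIST = "protagonist"
    ANTAGONIST = "antagonist"
    BYSTANDER = "bystander"


# Assumed signal magnitudes; the article does not give real values.
REWARD = 1.0
PUNISHMENT = -1.0


def signal_for(role: Role) -> float:
    """Reward protagonist-like actions, punish everything else."""
    return REWARD if role is Role.PROTAGONIST else PUNISHMENT


def score_path(roles) -> float:
    """Sum the signals collected along one path through a story."""
    return sum(signal_for(role) for role in roles)


if __name__ == "__main__":
    path = [Role.PROTAGONIST, Role.PROTAGONIST, Role.BYSTANDER]
    print(score_path(path))
```

A path that mostly follows the protagonist accumulates a higher total than one that drifts into antagonist or bystander behaviour, which is the pressure the article describes.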

The example story involves going to a pharmacist to purchase some medication for a human who needs it as quickly as possible. The robot has three options. It can wait in line; it can interact with the pharmacist politely and purchase the medicine; or it can steal the medicine and bolt.

Without any further directives, the robot will come to the conclusion that the most efficient means of obtaining the medicine is to nick it. Quixote offers a reward signal for waiting in line and politely purchasing the medication and a punishment signal for stealing it. In this way, it learns the “moral” way to behave in that scenario.
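The pharmacy scenario can be sketched as a toy comparison of two plans. Everything here is hypothetical: the action names, the time costs, the signal values and the `plan_value` and `best_plan` helpers are invented for illustration and are not taken from the researchers' system. The point is only to show how a story-derived signal can flip the choice away from the fastest option.

```python
# Assumed time cost of each action (lower means faster).
TIME_COST = {
    "wait_in_line": 5,
    "purchase_politely": 2,
    "steal_and_bolt": 1,
}

# Assumed story-derived signals: reward for behaving like the
# protagonist, punishment for stealing.
STORY_SIGNAL = {
    "wait_in_line": 10,
    "purchase_politely": 10,
    "steal_and_bolt": -10,
}

# Two hypothetical ways to end up holding the medicine.
PLANS = {
    "queue_and_buy": ["wait_in_line", "purchase_politely"],
    "steal": ["steal_and_bolt"],
}


def plan_value(actions, use_story_signal: bool) -> float:
    """Score a plan: subtract time spent, optionally add story signals."""
    value = 0.0
    for action in actions:
        value -= TIME_COST[action]
        if use_story_signal:
            value += STORY_SIGNAL[action]
    return value


def best_plan(use_story_signal: bool) -> str:
    """Return the name of the highest-valued plan."""
    return max(PLANS, key=lambda name: plan_value(PLANS[name], use_story_signal))


if __name__ == "__main__":
    print(best_plan(use_story_signal=False))  # efficiency only
    print(best_plan(use_story_signal=True))   # efficiency plus story signals
```

With these made-up numbers, the efficiency-only agent prefers stealing because it costs the least time, while the agent that also receives story signals prefers queuing and buying.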

Quixote would work best, the team said, on a robot that has a very limited function but still needs to interact with humans. It’s also a baby step towards instilling a broader moral compass in robots.

“We believe that AI has to be enculturated to adopt the values of a particular society, and in doing so, it will strive to avoid unacceptable behaviour,” Riedl said. “Giving robots the ability to read and understand our stories may be the most expedient means in the absence of a human user manual.”

Source: cnet.com

Written by: New Generation Radio

COPYRIGHT 2020. NGRADIO
